This week investors poured another $250 million into Cerebras Systems. The appeal lies in the scale of its chip: AI projects typically lean on GPUs, which have about 54 billion transistors. Cerebras Systems' chip, the WSE-2, includes 2.6 trillion transistors, which the startup says make it the "fastest AI processor on Earth."

WSE-2 stands for Wafer Scale Engine 2, a nod to the unique architecture on which the startup has based the processor.

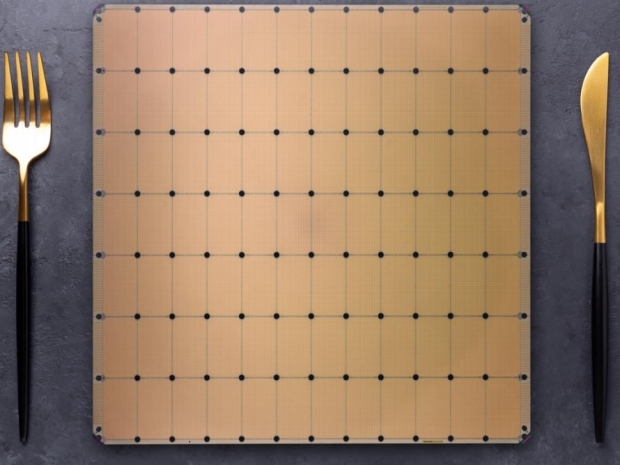

The typical approach to chip production is to carve as many as several dozen processors into a silicon wafer and then separate them. Cerebras Systems instead carves a single large processor into the wafer and does not break it up into smaller units.

The 2.6 trillion transistors in the WSE-2 are organised into 850,000 cores.

Cerebras Systems says that its WSE-2 has 123 times more cores and 1,000 times more on-chip memory than the closest GPU.
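To put the quoted figures side by side, here is a minimal Python sketch of the arithmetic. It uses only the numbers given in this article, and it assumes that "123 times more cores" means a simple multiple of 123; the constant and function names are illustrative only. On those assumptions, the WSE-2 has roughly 48 times the transistors of a 54-billion-transistor GPU, and the comparison GPU would have about 6,900 cores.

```python
# Figures quoted in the article (vendor claims, not independent measurements).
WSE2_TRANSISTORS = 2.6e12   # 2.6 trillion transistors
GPU_TRANSISTORS = 54e9      # about 54 billion transistors
WSE2_CORES = 850_000        # cores on the WSE-2
CORE_MULTIPLE = 123         # assumption: "123 times more" read as a 123x multiple


def transistor_ratio() -> float:
    """How many times more transistors the WSE-2 has than the quoted GPU."""
    return WSE2_TRANSISTORS / GPU_TRANSISTORS


def implied_gpu_cores() -> float:
    """Core count of the comparison GPU implied by the 123x claim."""
    return WSE2_CORES / CORE_MULTIPLE


if __name__ == "__main__":
    print(f"Transistor ratio: about {transistor_ratio():.0f}x")
    print(f"Implied GPU core count: about {implied_gpu_cores():,.0f}")
```

The two ratios differ (about 48 times for transistors against 123 times for cores), which is a reminder that core counts across different architectures are not directly comparable units of compute.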

To match the performance provided by a WSE-2 chip, a company would have to deploy dozens or hundreds of traditional GPU servers. With the WSE-2, data doesn't have to travel between two different servers but only from one section of the chip to another, which represents a much shorter distance. The shorter distance reduces processing delays. Cerebras Systems says that the result is an increase in the speed at which neural networks can run.