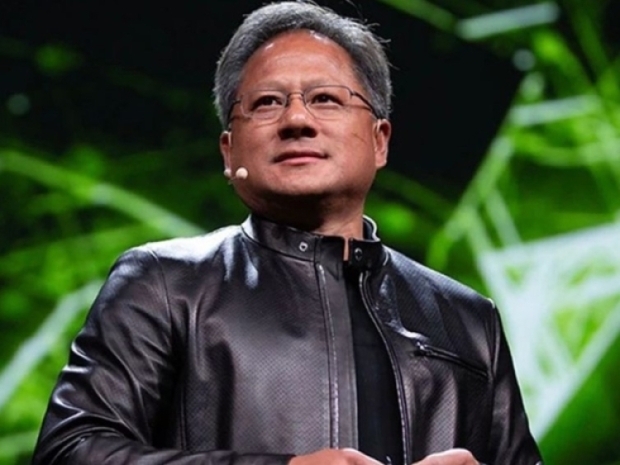

Talking to TechCrunch, Nvidia CEO Jensen Huang said: "Our systems are progressing way faster than Moore’s Law."

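Moore's Law, named after Intel co-founder Gordon Moore, who first described the trend in 1965, predicted that the number of transistors on a chip would roughly double every two years (the pace Moore settled on in 1975, revising his original estimate of once a year). For decades, that growth translated into steady gains in computing performance at falling cost.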
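As a rough illustration, assuming a fixed two-year doubling period and a starting transistor count $N_0$ (both simplifications; real chips never tracked the curve exactly), the trend can be written as:

$$
N(t) = N_0 \cdot 2^{t/2}, \qquad t \text{ in years}
$$

Over one decade this gives $N(10) = N_0 \cdot 2^{5} = 32\,N_0$, roughly a 32-fold increase.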

The trend held for decades, but its pace has slowed in recent years as transistors approach physical size limits and further shrinking becomes harder and more expensive.

Huang asserts that Nvidia’s AI chips are advancing much faster than that. The company says its latest data center superchip is more than 30 times faster at running AI inference workloads than its previous generation.

"We can build the architecture, the chip, the system, the libraries, and the algorithms all at the same time," he said. "If you do that, then you can move faster than Moore’s Law, because you can innovate across the entire stack."

Huang previously suggested the industry might be on pace for what he calls "hyper Moore’s Law."

He has said there are three active AI scaling laws: pre-training, post-training, and test-time compute.

"Moore’s Law was so important in the history of computing because it drove down computing costs," Huang said. "The same thing is going to happen with inference where we drive up the performance, and as a result, the cost of inference is going to be less."

During his keynote, Huang showcased Nvidia’s latest data center superchip, the GB200 NVL72. Nvidia says it is 30 to 40 times faster at running AI inference workloads than the H100, its previous best-selling chip, which could lower the cost of running AI models that need heavy compute at inference time.
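To put the claimed 30 to 40 times gain in Moore's Law terms, the following Python sketch asks how many years of doubling every two years would produce the same factor. It treats an inference speedup as if it were a transistor-count ratio, which is only a loose analogy, and the function name `moore_equivalent_years` is purely illustrative.

```python
import math


def moore_equivalent_years(speedup: float, doubling_period_years: float = 2.0) -> float:
    """Years of steady doubling needed to reach a given speedup factor.

    Assumption: a fixed doubling period (two years by default), with the
    speedup treated like a transistor-count ratio. This is a simplification.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    # Doublings needed = log2(speedup); each doubling takes one period.
    return doubling_period_years * math.log2(speedup)


# Nvidia's claimed range versus the H100.
for claimed in (30, 40):
    years = moore_equivalent_years(claimed)
    print(f"{claimed}x ~ {years:.1f} years of doubling every 2 years")
```

Under these assumptions, a 30x jump works out to about 9.8 years and a 40x jump to about 10.6 years of classic Moore's Law progress, which gives a sense of the gap Huang is pointing to.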