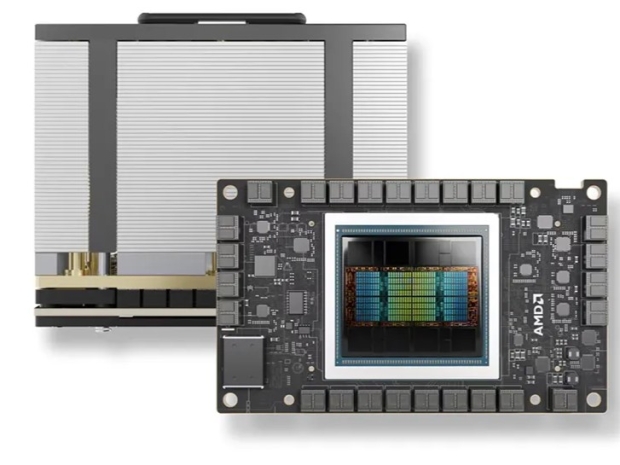

According to TechRadar, the AMD Instinct MI300X stands tall on the shoulders of AMD's third-generation CDNA architecture and is fabricated on TSMC's cutting-edge 5nm and 6nm processes.

Its credentials read like a symphony of power: 19,456 stream processors, a colossal 192GB of HBM3 memory, 304 compute units, 1,216 matrix cores, and a formidable 750-watt TDP.

The MI300X scored 379,660 in Geekbench's OpenCL benchmark, roughly 19% ahead of the RTX 4090's score of about 320,000. The Geekbench run took place on a Supermicro AS-8125GS-TNMR2 system powered by an AMD EPYC 9754 CPU.

But that's not all. The MI300X posts equally striking numbers across the individual workloads:

- Background Blur: 435.2 images/sec

- Face Detection: 279.7 images/sec

- Horizon Detection: 18.4 Gpixels/sec

- Edge Detection: 42.4 Gpixels/sec

- Gaussian Blur: 33.7 Gpixels/sec

- Feature Matching: 1.28 Gpixels/sec

- Stereo Matching: An astonishing 1.59 Tpixels/sec

- Particle Physics: A blistering 74,430.6 FPS

The MI300X, battle-hardened for data centres, AI applications, and Herculean computing tasks, competes against Nvidia's H100. The RTX 4090, by contrast, is tailored for gamers and creative maestros and targets a different market entirely.

While the RTX 4090 beckons consumers with a $1,700 price tag on Amazon, the MI300X costs more than $15,000. Those seeking computational supremacy must choose between the sublime and the stratospheric.
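To put the scores and prices side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above. The "points per dollar" metric and the function name `points_per_dollar` are illustrative assumptions, not something Geekbench or either vendor reports. Because the MI300X price is given only as "more than $15,000", its result is an upper bound.

```python
# Figures quoted in the article. The MI300X price is a lower bound
# ("more than $15,000"), so its points-per-dollar value is an upper bound.
mi300x_score, mi300x_price = 379_660, 15_000
rtx4090_score, rtx4090_price = 320_000, 1_700


def points_per_dollar(score: float, price: float) -> float:
    """Hypothetical value metric: OpenCL score divided by price in USD."""
    return score / price


# Relative OpenCL lead of the MI300X over the RTX 4090, in percent.
lead_pct = (mi300x_score / rtx4090_score - 1) * 100

mi300x_ppd = points_per_dollar(mi300x_score, mi300x_price)
rtx4090_ppd = points_per_dollar(rtx4090_score, rtx4090_price)

print(f"MI300X OpenCL lead: {lead_pct:.1f}%")
print(f"MI300X: at most {mi300x_ppd:.1f} points per dollar")
print(f"RTX 4090: {rtx4090_ppd:.1f} points per dollar")
```

On this one benchmark the consumer card delivers far more score per dollar, which is unsurprising: a single OpenCL number says nothing about the 192GB of HBM3 or the data-centre workloads the MI300X is built for.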