The chip is dedicated to solving machine learning problems in artificial intelligence and could lead to dramatic changes in the way deep learning networks are devised, or at least that is what the company tells us.

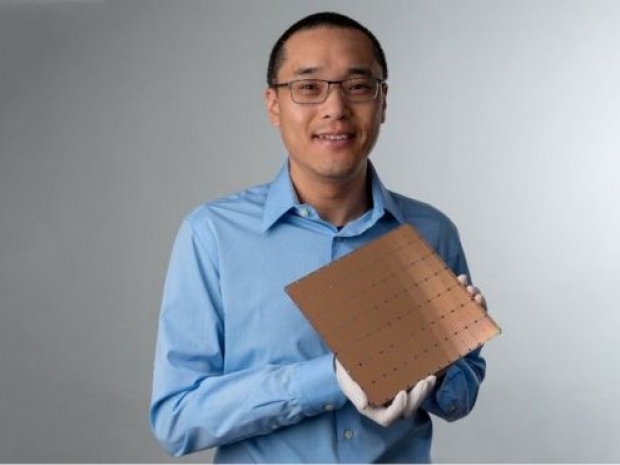

Cerebras's "Wafer-Scale Engine" takes up almost all the area of a 12-inch silicon wafer, making it 57 times the size of Nvidia's largest graphics processing unit.

But that opens shedloads of design problems about how you create a coherent system functioning across a wafer 12 inches in diameter that is usually meant to be cut into multiple parts for individual chips.

The wafer is organised into 84 tiles, or bricks as you might call them, and if one of them is bad, you need software to isolate it so it doesn't affect the performance of the whole wafer.
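To give a rough idea of what isolating a bad tile means, here is a minimal sketch in Python. It is not Cerebras's software: the function names, the job labels and the round-robin placement are all made up for illustration, and only the figure of 84 tiles comes from the description above.

```python
# Hypothetical sketch: keep work off defective tiles on a wafer.
TILE_COUNT = 84  # tile count quoted in the article


def build_tile_map(defective):
    """Return the physical tile indices that are safe to schedule work on."""
    bad = set(defective)
    return [tile for tile in range(TILE_COUNT) if tile not in bad]


def assign(jobs, usable_tiles):
    """Spread jobs round-robin across the usable tiles only."""
    return {job: usable_tiles[i % len(usable_tiles)] for i, job in enumerate(jobs)}


# Assume (for illustration) that tile 17 failed testing.
usable = build_tile_map(defective=[17])
placement = assign(["layer0", "layer1", "layer2"], usable)
```

The point of the design is that the rest of the system only ever sees the list of usable tiles, so a bad tile costs a little capacity rather than the whole wafer.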

Only about 1 to 2 percent of the wafer area is unusable in any wafer run.

Another problem in the past was that there were not enough metal wires to interconnect the various sections of the wafer, explains Lamond.

There are other innovations in hardware over and above the basic fabrication breakthrough, notes Lamond. Cerebras developed a way to detect, in hardware, zero-valued elements in a neural network to avoid computing them.
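The idea of skipping zero-valued elements can be illustrated in a few lines of Python. This is only a software sketch of the principle, with a made-up function name and made-up numbers; the whole point of the Cerebras approach is that the check happens in hardware, so the cycles spent on the `if` test here would not be spent there.

```python
def sparse_dot(activations, weights):
    """Dot product that skips the multiply-accumulate for zero activations.

    Returns the result and the number of multiply-accumulates performed.
    """
    total = 0.0
    macs = 0
    for a, w in zip(activations, weights):
        if a == 0:
            continue  # a zero contributes nothing, so do no work for it
        total += a * w
        macs += 1
    return total, macs


# Illustrative values only: half of the activations are zero.
result, macs_done = sparse_dot([0.0, 2.0, 0.0, 0.5], [1.5, 3.0, -4.0, 2.0])
```

With half of the inputs at zero, only two of the four multiply-accumulates are carried out, and the answer is the same as the dense calculation.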

"It was a very astute development to discover the zeros in hardware, so you don't use software to do it. It saves cycles. That was a very clever development beyond the silicon development", the outfit said.

Cooling the chip and connecting it to the outside world are additional challenges.

A chip that runs at 15 kilowatts consumes a tremendous amount of power and throws off heat to match, and you have to cool it reliably.

Cerebras has developed an elaborate network of pipes to carry water to effectively irrigate the WSE. But this means that it has to sell the WSE as part of a complete computing system for machine learning, rather than selling chips as Nvidia and Intel do as "merchant" semiconductor suppliers.