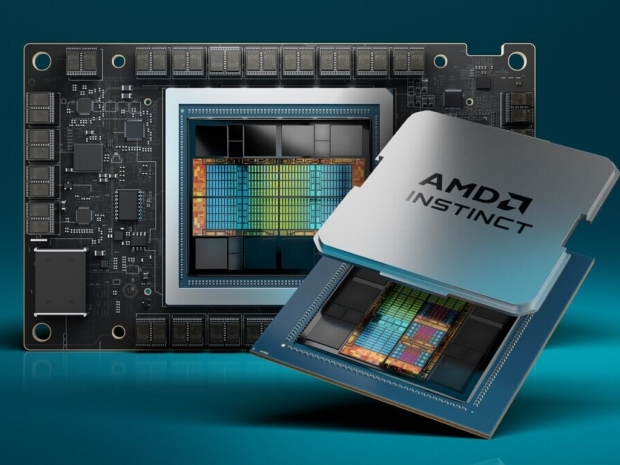

AMD built its MI300 lineup using the most advanced manufacturing technologies ever brought to mass production, employing new techniques like its ‘3.5D’ packaging to produce two multi-chip behemoths that it says provide Nvidia-beating performance in a wide range of AI workloads. AMD isn’t sharing pricing at this point, but it did say that the chips are now shipping to a wide range of OEM partners.

The AMD Instinct MI300 uses what AMD calls 3.5D packaging, with a total of 13 chiplets. Overall, the chip packs 153 billion transistors, which is an impressive feat. When it comes to performance claims, AMD says it delivers up to 4x the performance of Nvidia’s H100 GPUs in some workloads, as well as 2x the performance per watt.

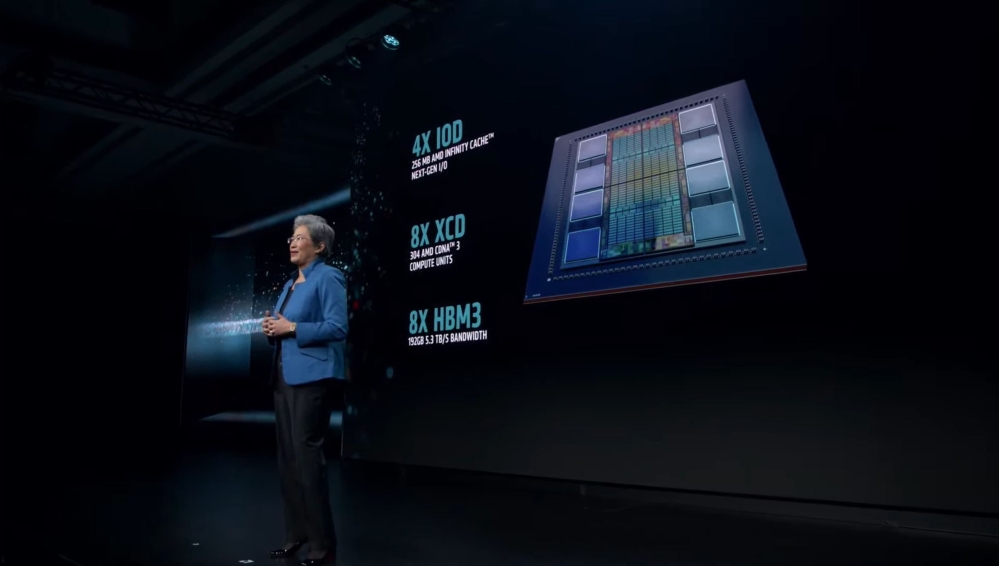

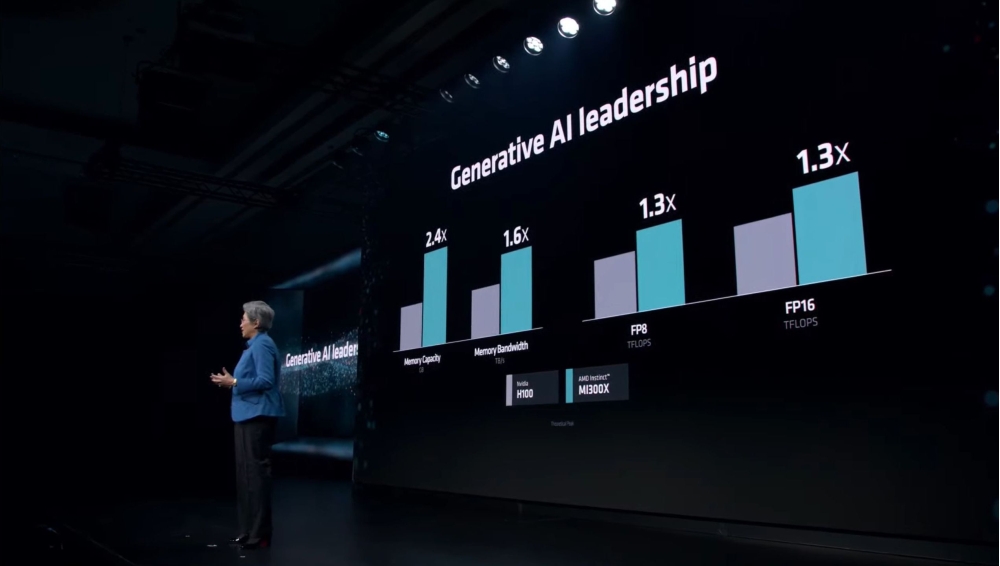

The AMD Instinct MI300X Accelerator, as it is called, combines eight physical stacks of 12Hi HBM3 memory with eight 5nm CDNA 3 GPU chiplets, now called XCDs, or Accelerator Complex Dies, and four 6nm I/O dies. This leaves the MI300X with 192GB of HBM3 memory at 5.3TB/s of bandwidth, 304 compute units, and 256MB of Infinity Cache that is used as a shared L3 cache. It is meant to be deployed as the AMD Instinct MI300X platform, which combines eight Instinct MI300X accelerators for 10.4 PF (PetaFLOPS) of FP16 compute performance and 1.5TB of HBM3 memory, linked by an 896GB/s Infinity Fabric interconnect. Compared to Nvidia's H100 HGX platform, AMD claims up to 2.4x more memory and 1.3x higher computational performance.
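The per-accelerator and platform figures above hang together arithmetically. The short Python sketch below derives the per-stack, per-XCD, and platform-level numbers from the quoted specifications. The variable names are illustrative, and reading 1.5TB as 1,536GB assumes binary units (1TB = 1,024GB), which the article does not state.

```python
# Figures quoted in the article for the MI300X and the eight-way platform.
HBM3_STACKS = 8                 # physical HBM3 stacks per accelerator
STACK_HEIGHT = 12               # "12Hi" means 12 memory dies per stack
HBM3_PER_ACCELERATOR_GB = 192
XCDS = 8                        # GPU chiplets per accelerator
COMPUTE_UNITS = 304
ACCELERATORS_PER_PLATFORM = 8
PLATFORM_FP16_PFLOPS = 10.4

# Derived per-accelerator values.
gb_per_stack = HBM3_PER_ACCELERATOR_GB // HBM3_STACKS
gb_per_die = gb_per_stack // STACK_HEIGHT
cus_per_xcd = COMPUTE_UNITS // XCDS

# Derived platform values (assumption: 1 TB = 1024 GB).
platform_memory_gb = HBM3_PER_ACCELERATOR_GB * ACCELERATORS_PER_PLATFORM
platform_memory_tb = platform_memory_gb / 1024
fp16_per_accelerator_pflops = PLATFORM_FP16_PFLOPS / ACCELERATORS_PER_PLATFORM

print(f"HBM3 per stack:          {gb_per_stack} GB")
print(f"HBM3 per die:            {gb_per_die} GB")
print(f"Compute units per XCD:   {cus_per_xcd}")
print(f"Platform memory:         {platform_memory_gb} GB ({platform_memory_tb} TB)")
print(f"FP16 per accelerator:    {fp16_per_accelerator_pflops:.1f} PF")
```

The result is 24GB per stack, 38 compute units per XCD, and roughly 1.3 PF of FP16 compute per accelerator, all of which follow from the quoted totals rather than from separately published figures.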

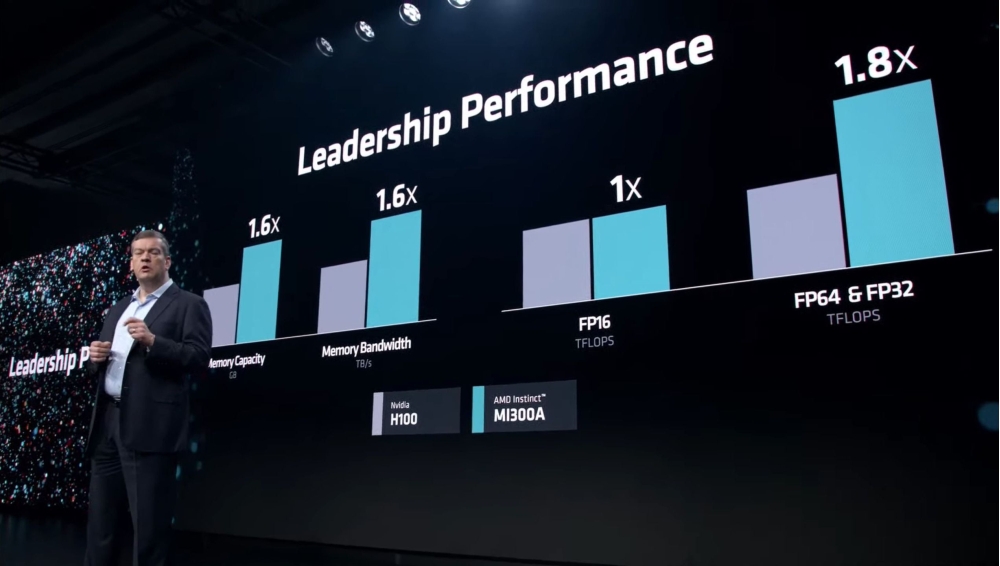

The AMD Instinct MI300A, described as the first data center APU, combines both a CPU and a GPU, and it is meant to compete with the likes of Nvidia's Grace Hopper. The MI300A is already headed to the El Capitan supercomputer, which is an impressive win for AMD.

The MI300A uses pretty much the same design idea as the MI300X but swaps two of the 5nm XCDs for three CPU Complex Dies, or CCDs. This leaves it with a total of 24 x86 Zen 4 CPU cores and 228 AMD CDNA 3 compute units. It also cuts down the memory capacity by using eight 8Hi HBM3 stacks, leaving it with 128GB of memory, but still the same 5.3TB/s of memory bandwidth.
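The MI300A figures can be cross-checked against the MI300X numbers quoted earlier. The Python sketch below works out how many XCDs the compute-unit count implies, how the cores divide across the CCDs, and how the 8Hi stacks reach 128GB. The variable names are illustrative, and the sketch assumes the MI300A keeps the same four I/O dies as the MI300X, which the article implies but does not state outright.

```python
# Figures quoted in the article.
MI300X_XCDS = 8
MI300X_COMPUTE_UNITS = 304
MI300X_HBM3_GB = 192
MI300X_STACK_HEIGHT = 12        # 12Hi stacks
MI300A_COMPUTE_UNITS = 228
MI300A_CCDS = 3
MI300A_CPU_CORES = 24
MI300A_STACK_HEIGHT = 8         # 8Hi stacks
HBM3_STACKS = 8                 # both parts use eight stacks
IO_DIES = 4                     # assumption: unchanged from the MI300X

# Compute units per XCD, taken from the MI300X totals.
cus_per_xcd = MI300X_COMPUTE_UNITS // MI300X_XCDS

# XCDs implied by the MI300A compute-unit count.
mi300a_xcds = MI300A_COMPUTE_UNITS // cus_per_xcd

# CPU cores carried by each CCD.
cores_per_ccd = MI300A_CPU_CORES // MI300A_CCDS

# Capacity of one HBM3 die, then total MI300A memory with shorter stacks.
gb_per_die = MI300X_HBM3_GB // (HBM3_STACKS * MI300X_STACK_HEIGHT)
mi300a_memory_gb = HBM3_STACKS * MI300A_STACK_HEIGHT * gb_per_die

# Total chiplet count for the MI300A.
mi300a_chiplets = mi300a_xcds + MI300A_CCDS + IO_DIES

print(f"XCDs on MI300A:     {mi300a_xcds}")
print(f"Cores per CCD:      {cores_per_ccd}")
print(f"MI300A memory:      {mi300a_memory_gb} GB")
print(f"MI300A chiplets:    {mi300a_chiplets}")
```

At 38 compute units per XCD, 228 compute units correspond to six XCDs, so two of the MI300X's eight XCDs give way to the three CCDs. Six XCDs, three CCDs, and four I/O dies also add up to the 13 chiplets quoted for the design.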

AMD also claims significant performance improvements over Nvidia with the MI300A, but we'll have to wait and see how it compares against the Nvidia Grace Hopper GH200 Superchip in real-world applications.

As noted earlier, AMD is not sharing any pricing details but did say that these chips are now shipping to partners.